With Friends Like These, Who Needs Adversaries?

This work performs a principled analysis of the class decision functions learned by classification CNNs by contextualising an existing geometrical framework for network decision boundary analysis.

Our analysis uncovers some intriguing yet simple properties of the class score functions learned by these networks, which help explain their adversarial vulnerability.

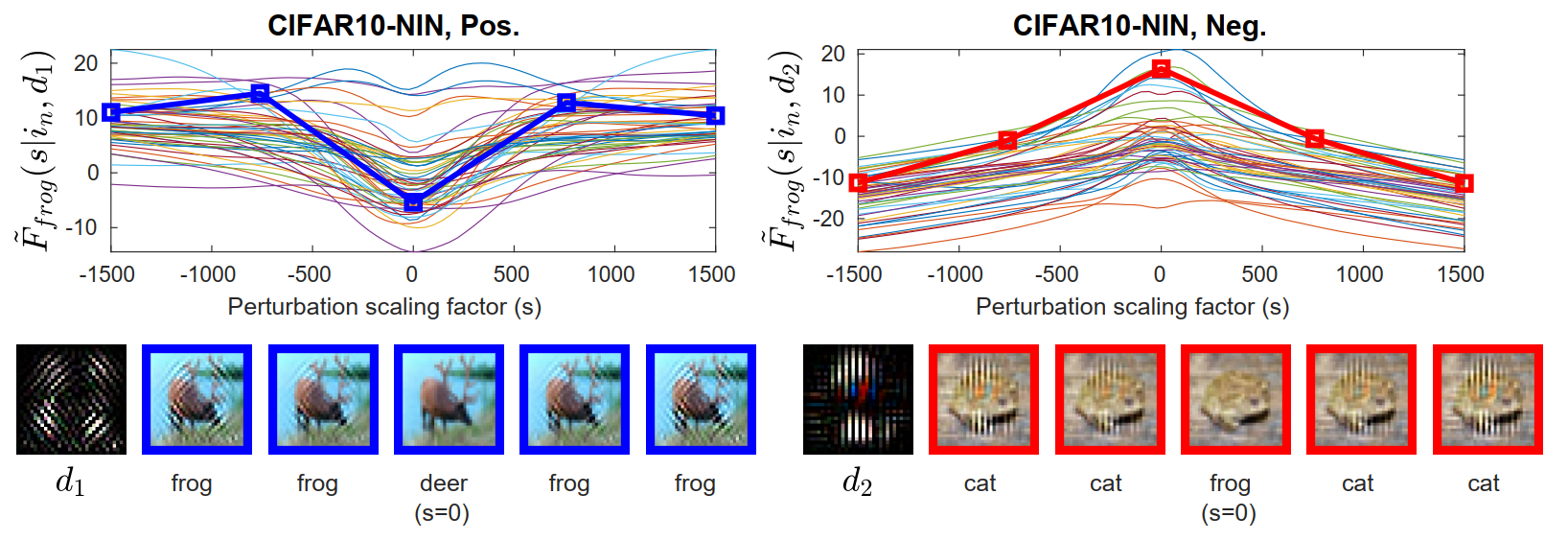

We find that specific directions in input image space tend to be associated with fixed class identities.

This means that simply increasing the magnitude of correlation between the input image and a single image space direction causes the nets to believe that more (or less) of the class is present.
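As a rough illustration of this behaviour, the sketch below sweeps an input along a single unit-norm direction and records a class score at each step. Everything in it is an assumption made for illustration: `sweep_direction`, `toy_class_score`, the random vectors `w` and `x`, and the softplus score are hypothetical stand-ins, not the networks or directions analysed in the paper. With a real model, `score_fn` would return the chosen class score for a flattened image.

```python
import numpy as np

def sweep_direction(score_fn, x, direction, ts):
    """Evaluate score_fn at x + t * d for each t in ts, with d scaled to unit length."""
    d = direction / np.linalg.norm(direction)
    return np.array([score_fn(x + t * d) for t in ts])

# Toy stand-in for a single class score (an assumption, not a trained CNN):
# a softplus applied to one linear projection of the flattened image.
rng = np.random.default_rng(0)
w = rng.normal(size=64)   # hypothetical class-associated image space direction
x = rng.normal(size=64)   # hypothetical flattened input image

def toy_class_score(v):
    return np.logaddexp(0.0, w @ v)   # softplus(w . v), non-linear but monotone

ts = np.linspace(-3.0, 3.0, 13)       # signed displacement along the direction
scores = sweep_direction(toy_class_score, x, w, ts)

# In this toy, moving further along the direction always raises the class score,
# and moving against it always lowers the score.
print(np.all(np.diff(scores) > 0))
```

In this toy the score depends on the input only through its projection onto `w`, so the sweep is monotone by construction; for a trained network the shape of the curve is an empirical question.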

This allows us to provide a new perspective on the existence of universal adversarial perturbations.

Further, the input image space directions that the networks use to achieve their classification performance are the same directions along which they are most vulnerable to attack. The vulnerability arises from the rather simplistic non-linear use of these directions. Thus, as it stands, the performance and vulnerability of these nets are closely entwined.

Several notable observations follow from this. One is that any attempt to make a trained network more robust to adversarial attack by suppressing input data dimensions or intermediate network features would always be accompanied by a corresponding loss in network accuracy.

Moreover, if the attack is optimised to take into account the suppression of dimensions, it regains its effectiveness.
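The interplay between suppression and attack can be sketched with a deliberately simple linear score, which is an assumption made for illustration and not the setting studied in the paper. The names `w`, `keep`, `score`, `naive`, and `adapted` are hypothetical. The sketch masks the input dimensions the score relies on most, then compares an attack tuned to the undefended model with one tuned to the masked model, both under the same L2 budget `eps`.

```python
import numpy as np

rng = np.random.default_rng(0)
dim, k, eps = 32, 8, 1.0

w = rng.normal(size=dim)                  # hypothetical linear class-score weights
keep = np.ones(dim)
keep[np.argsort(-np.abs(w))[:k]] = 0.0    # suppress the k most heavily used input dimensions

def score(x, mask=None):
    """Linear class score; mask (if given) zeroes the suppressed input dimensions."""
    return w @ (x if mask is None else mask * x)

# Two perturbations with the same L2 norm eps.
naive = eps * w / np.linalg.norm(w)                # tuned to the undefended model
w_kept = keep * w
adapted = eps * w_kept / np.linalg.norm(w_kept)    # tuned to the defended model

# The score is linear, so the shift a perturbation causes equals its own score.
shift_undefended = score(naive)                    # eps * ||w||
shift_naive_defended = score(naive, keep)          # eps * ||w_kept||^2 / ||w||
shift_adapted_defended = score(adapted, keep)      # eps * ||w_kept||

# Crude proxy for the discriminative signal that survives suppression.
signal_kept = np.linalg.norm(w_kept) / np.linalg.norm(w)
```

In this toy, suppression weakens the naive attack, but it also discards part of the signal the score depends on (`signal_kept` is below 1), and the adapted attack recovers all of the sensitivity the defended score still has. Whether the same pattern holds for a given trained network has to be checked empirically.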

These results present key implications for future efforts to construct neural nets that are both accurate and robust to adversarial attack.

[paper]

[code]

[video]

[recommendation]